What is Apache Spark?

Apache Spark is an open-source, distributed cluster-computing framework and data processing engine. It was developed to provide faster and easier-to-use analytics than Hadoop MapReduce. It started at the University of California, Berkeley’s AMPLab and was later handed over to the Apache Software Foundation, which maintains it today.

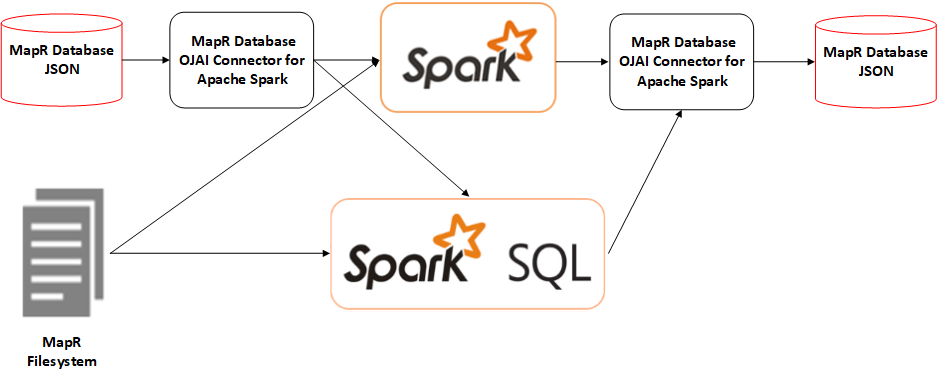

Components of Spark:

Spark consists of a core engine and a set of libraries and APIs built on top of it. The main components of Apache Spark are as follows:

- Spark Core:

Spark Core is the basic building block of Spark. It includes the following:

- Components for job scheduling.

- Memory management.

- Fault tolerance, and more.

Spark Core is also home to the API that defines resilient distributed datasets (RDDs), and it provides APIs for building and manipulating data in RDDs.

- Spark SQL:

Spark SQL is Apache Spark’s go-to tool for working with structured data. It allows querying data via SQL as well as via Apache Hive’s variant of SQL, the Hive Query Language (HQL). It also supports data from various sources, such as Parquet tables, log files, and JSON. Spark SQL lets programmers combine SQL queries with the programmatic transformations and manipulations supported by RDDs in Python, Java, Scala, and R.

- Spark Streaming:

Spark Streaming processes live streams of data, so data generated by various sources can be processed as soon as it arrives. Examples of such data include log files and messages containing status updates posted by users.

- GraphX:

GraphX is Apache Spark’s library for working with graphs and performing graph-parallel computation. It includes several common graph algorithms, which helps users simplify graph analytics.

- MLlib:

Apache Spark comes with a library of common machine learning (ML) functionality called MLlib. It provides various types of ML algorithms, including the following:

- Regression.

- Clustering.

- Classification.

These algorithms can be applied to data to extract meaningful insights from it.

Applications of Apache Spark:

Spark is widely used across many industries. The following are some of the applications where it is most useful today:

- Machine Learning:

Apache Spark comes equipped with a scalable machine learning library, MLlib, which can perform advanced analytics such as clustering and classification. Prominent analytics jobs such as predictive analysis and customer segmentation are what make Spark a good fit for intelligent applications.

- Fog computing:

With the rise of big data, the Internet of Things (IoT) has gained a prominent place as a driver of more advanced technologies. It is based on connecting digital devices with the help of small sensors, and it has to deal with huge amounts of data coming from numerous sources. This requires parallel processing, which is hard to achieve with centralized cloud computing alone. Fog computing therefore decentralizes data processing and storage, and Spark Streaming is a good fit for this problem.

- Event detection:

Spark Streaming lets organizations keep track of rare and unusual behavior in order to protect their systems. Financial, security, and health institutions use triggers to detect potential risks.

- Interactive analysis:

Support for interactive analysis is one of the most notable features of Apache Spark. Unlike MapReduce, which is built for batch processing, Spark processes data fast enough to run exploratory queries without sampling.

A few popular companies that use Apache Spark are:

- Uber.

- Pinterest.

- Conviva.

This is a simple article that explains how to install Spark on a Windows PC without using Docker.

By the end of the article you will be able to use Spark with either Scala or Python. We are going to get there in 5 simple steps.

Before we begin:

It is very important that you replace every path that includes the folder “Program Files” or “Program Files (x86)” with its shortened form, as explained below. This avoids problems later when running Spark.

If you already have Java installed, you still need to fix the JAVA_HOME and PATH variables.

- Replace “Program Files” with “Progra~1”

- Replace “Program Files (x86)” with “Progra~2”

- Example: “C:\Program Files\Java\jdk1.8.0_161” -> “C:\Progra~1\Java\jdk1.8.0_161”
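If you have several paths to fix, the replacement rules above can be sketched in a few lines of Python. This is only an illustration: the helper name `shorten_program_files` and the `SHORT_NAMES` table are my own, and the sketch assumes your system uses the default short names Progra~1 and Progra~2.

```python
# Hypothetical helper, not part of Spark or Windows tooling.
# Assumes the default short names for the two "Program Files" folders.
SHORT_NAMES = {
    "Program Files (x86)": "Progra~2",
    "Program Files": "Progra~1",
}


def shorten_program_files(path: str) -> str:
    """Replace the 'Program Files' folders in a path with their short names."""
    # The longer name is listed first so that "Program Files (x86)" is not
    # partially rewritten as "Progra~1 (x86)".
    for long_name, short_name in SHORT_NAMES.items():
        path = path.replace(long_name, short_name)
    return path


if __name__ == "__main__":
    print(shorten_program_files(r"C:\Program Files\Java\jdk1.8.0_161"))
```

Paths that do not contain either folder are returned unchanged, so it is safe to run the helper over every path you plan to put into an environment variable.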

1. Prerequisite — Java 8:

Before you start, make sure you have Java 8 installed and the environment variables correctly defined.

- Download Java JDK 8 from Java’s official website.

- Set the following environment variables:

- JAVA_HOME = C:\Progra~1\Java\jdk1.8.0_161

- PATH += C:\Progra~1\Java\jdk1.8.0_161\bin

- Optional: _JAVA_OPTIONS = -Xmx512M -Xms512M (this avoids common Java heap memory problems with Spark).

Progra~1 is the shortened path for “Program Files”.

2. Spark: Download and Install:

- Download Spark from Spark’s official website

- Choose the newest release (2.3.0 in my case)

- Choose the newest package type (Pre-built for Hadoop 2.7 or later in my case)

- Download the .tgz file

- Extract the .tgz file into D:\Spark

In this article I am using my D drive, but you can use the C drive as well.

- Set the environment variables:

- SPARK_HOME = D:\Spark\spark-2.3.0-bin-hadoop2.7

- PATH += D:\Spark\spark-2.3.0-bin-hadoop2.7\bin

3. Spark: Some more stuff (winutils)

- Download winutils.exe from the official website.

- Choose the same Hadoop version as the package type you chose for the Spark .tgz file in section 2, “Spark: Download and Install”.

- Navigate into the hadoop-X.X.X folder. Inside its bin folder you will find winutils.exe.

- If you chose the same version as me (hadoop-2.7.1), a direct link is available on the website.

- Move the winutils.exe file to the bin folder inside SPARK_HOME.

- In my case: D:\Spark\spark-2.3.0-bin-hadoop2.7\bin

- Set the following environment variable to the same value as SPARK_HOME:

- HADOOP_HOME = D:\Spark\spark-2.3.0-bin-hadoop2.7
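Before moving on, it can help to double-check the variables set in steps 1 to 3. The following is a minimal sketch, not an official Spark tool: the function name `check_spark_env` and the exact checks are my own. It assumes that a space in a path (the reason for the Progra~1 trick) is what you want to catch.

```python
import os

# Variables defined in steps 1 to 3 of this article.
REQUIRED_VARS = ("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME")


def check_spark_env(env=None):
    """Return a list of problems found; an empty list means all checks passed."""
    env = os.environ if env is None else env
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif " " in value:
            # Assumption: spaces are what the shortened paths are meant to avoid.
            problems.append(f"{name} contains a space: {value}")
    spark_home = env.get("SPARK_HOME")
    hadoop_home = env.get("HADOOP_HOME")
    # Step 3 sets HADOOP_HOME to the same folder as SPARK_HOME.
    if spark_home and hadoop_home and spark_home != hadoop_home:
        problems.append("HADOOP_HOME differs from SPARK_HOME")
    return problems


if __name__ == "__main__":
    issues = check_spark_env()
    print("\n".join(issues) if issues else "Environment looks consistent")
```

Open a new cmd window before running it, because environment variables changed in the system settings are only visible to processes started afterwards.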

4. Optional: Some tweaks to avoid future errors

This step is optional, but I highly recommend it. It fixed some bugs I had after installing Spark.

Hive Permissions Bug:

- Create the folder D:\tmp\hive

- Execute the following command in a cmd window that you started using the option Run as administrator:

- cmd> winutils.exe chmod -R 777 D:\tmp\hive

- Check the permissions.

- cmd> winutils.exe ls -F D:\tmp\hive

5. Optional: Install Scala

If you are planning on using Scala instead of Python for programming in Spark, follow this step:

- Download Scala from their official website

- Download the Scala binaries for Windows (scala-2.12.4.msi in my case)

- Install Scala from the .msi file

- Set the environment variables:

- SCALA_HOME = C:\Progra~2\scala

- PATH += C:\Progra~2\scala\bin

Progra~2 is the shortened path for “Program Files (x86)”.

- Check that Scala is working by running the following command in cmd:

- cmd> scala -version

Testing Spark:

PySpark (Spark with Python):

Once Spark is installed, test it by running a short piece of code from the pyspark shell. You can ignore the WARN messages.
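As an illustration of what such a test can look like, here is a minimal sketch. It is not the exact code from my original setup, and the function names are hypothetical. It relies on the `sc` variable (the SparkContext) that the pyspark shell creates for you, and it compares Spark's answer with a closed-form formula computed in plain Python.

```python
def expected_sum_of_squares(n):
    """Closed-form value of 1^2 + 2^2 + ... + n^2, computed without Spark."""
    return n * (n + 1) * (2 * n + 1) // 6


def spark_sum_of_squares(sc, n):
    """Compute the same sum with an RDD on the given SparkContext `sc`."""
    # parallelize() distributes the numbers, map() squares each one,
    # and sum() triggers the actual job and collects the result.
    return sc.parallelize(range(1, n + 1)).map(lambda x: x * x).sum()
```

After pasting both functions into the pyspark shell, evaluating `spark_sum_of_squares(sc, 100) == expected_sum_of_squares(100)` should give `True` if Spark is able to run a job.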

Scala-shell:

To test whether Scala and Spark were successfully installed, run a short query from the spark-shell. This applies only if you installed Scala on your computer.

PS: The query will not work if you have more than one spark-shell instance open.

PySpark with PyCharm (Python 3.x)

If you have PyCharm installed, you can also write a “Hello World” program to test PySpark.

PySpark with Jupyter Notebook:

You will need the findspark package to make a Spark context available in your code. This package is not specific to Jupyter Notebook; you can use it in your IDE too.

Install and launch the notebook from cmd.

Create a new Python notebook and put the findspark initialization at the beginning of the script.

Now you can add your code below it and run the notebook.

I hope this article helped you understand how to install Spark on Windows. You can install Spark using the steps given above and then test it as described.